Exact Inference¶

Suggested Reading¶

- Murphy: Chapter 20

- MacKay: Chapter 21.1 (worked example with numbers)

- MacKay: Chapter 16 (Message Passing, including soldier intuition)

- MacKay: Chapter 26 Exact Inference on Factor Graphs

Overview¶

- Variable elimination (VE)

- Complexity of VE

Inference as Conditional Distribution¶

In this lecture, we will explore inference in probabilistic graphical models (PGM).

Let

where \(X_R\) is the set of random variables in our model that are neither part of the query nor the evidence. For inference we will marginalize out these extraneous variables, focussing on the joint distribution over evidence and subject of inference:

In particular, for inference will focus computing the conditional probability distribution

Note that the conditional distributions can be computed by marginalizing over all the other variables, including extraneous, in our model's joint distribution.

The subject of this lecture will be concerned with how to efficiently marginalize over all variables.

We will see that the order which variables are marginalized over can considerably affect the computational cost, and doing the marginalization naively can incur an exponential cost in the number of random variables.

Variable elimination¶

- A simple and general exact inference algorithm in any probabilistic graphical model (though we focus on Directed Acyclic Graphical models).

- Has computational complexity that depends on the graph structure of the model. We saw last lecture that the graph structure corresponds to independence assumptions encoded into the model.

- Can use dynamic programming to avoid enumerating all variable assignments.

Simple Example: Chain¶

Lets start with the example of a simple chain

where we want to compute \(P(D)\), with no observations for other variables.

We have

We saw last lecture that this graphical model describes the factorization of the joint distribution as:

If the goal is to compute the marginal distribution \(p(D)\) with no observed variables then we marginalize over all variables but \(D\):

However, if we do this sum naively, it will be exponential \(O(k^n)\):

In particular, we are summing over the elements of \(A\) for every term in the sum over elements in \(B\) .

Instead, if we choose a different order for the sums, or elimination ordering:

we reduce the complexity by first computing terms that appear across the other marginalization sums.

So, by using dynamic programming to do the computation inside out instead of outside in, we have done inference over the joint distribution represented by the chain without generating it explicitly. The cost of performing inference on the chain in this manner is \(\mathcal O(nk^2)\). In comparison, generating the full joint distribution and marginalizing over it has complexity \(\mathcal O(k^n)\)!

Tip

See slide 7-13 for a full derivation of this example, including the runtime \(\mathcal O(nk^2)\).

Simple Example: DGM¶

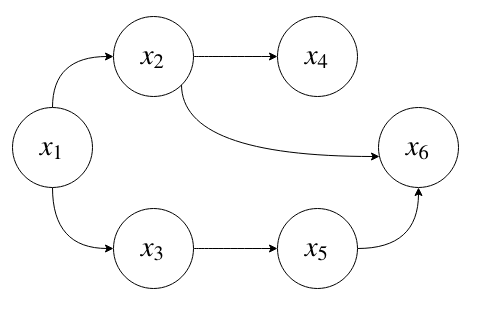

Lets take the DGM we saw in lecture 3

To answer the inference question, observing the state of a random variable \(X_6 = \bar x_6\), what is \(p(X_1 | \bar x_6)\)?

Note

The \(\bar x\) denotes that the variable is observed.

First, recall that the above graphical model describes a factorization of the joint distribution encoding independence between variables:

Where the random variables under the joint distribution are inferred, observed, or marginalized respectively:

and

to compute \(p(x_1, \bar x_6)\), we use variable elimination

Note that \(p(\bar x_6 | x_2, x_3)\) does not need to participate in \(\sum_{x_4}\).

Finally,

So, we've seen that the complexity of variable elimination is related to the elimination ordering. Unfortunately, finding the best elimination ordering is NP-hard. Though, there are some heuristics.

Intermediate Factors¶

In the above examples, each time we eliminated a variable it resulted in a new conditional or marginal distribution. However, in general eliminating does not produce a valid marginal or conditional distribution of the graphical model.

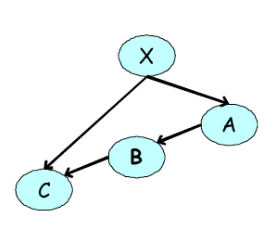

Consider the distribution given by

Suppose we want to marginalize over \(X\):

However, the resulting term \(\sum_X p(X) p(A \vert X) p(C \vert B,X)\) does not correspond to a valid conditional or marginal distribution because it is unnormalized.

For this reason we introduce factors \(\phi\) which are not necessarily normalized distributions, but which describe the local relationship between random variables.

In the above example:

In this case the original conditional distributions are represented by factors over all variables involved. This obfuscates the dependence relationship between the variables encoded by the conditional distribution. Following marginalizing over \(X\) we introduce a new factor, denoted by \(\tau\) over the remaining variables.

Note that for directed acyclic graphical models, who are defined by factorizing the joint into conditional distributions, we introduce intermediate factors to only be careful about notation.

However, there are other kinds of graphical models (e.g. undirected graphical models, and factor graphs) that are not represented by factorizing the joint into a product of conditional distributions. Instead, they factorize into a product of local factors, which will need to be normalized.

By introducing factors, even for DAGs, we can write the variable elimination algorithm for any probabilistic graphical model:

Sum-Product Inference¶

Note

The algorithm presented below is notationally verbose for generality. If you understand variable elimination algorithm presented above, this just makes the procedure precise.

Computing \(P(Y)\) for directed and undirected models is given by sum-product inference algorithm

where \(\Phi\) is a set of potentials or factors.

For directed models, \(\Phi\) is given by the conditional probability distributions for all variables

where the sum is over the set \(Z = X - X_F\). The resulting term \(\tau(Y)\) will automatically be normalized.

For undirected models, \(\Phi\) is given by the set of unnormalized potentials. Therefore, we must normalize the resulting \(\tau(Y)\) by \(\sum_Y\tau(y)\).

Example: Directed Graph¶

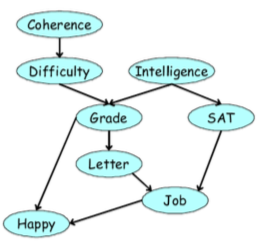

Take the following directed graph as example

This describes a factorization of the joint distribution:

And for notational convenience, we can write the conditional distributions as factors.

If we are interested in inferring the probability of getting a job, \(P(J)\) we can perform exact inference on the joint distribution by marginalizing according to a specific variable elimination ordering.

Example: Elimination Ordering \(\prec \{C, D, I, H, G, S, L\}\)¶

Note again that because our original factors correspond to marginal and conditional distributions we do not need to renormalize the final factor \(\tau(J)\). However, if we started with potential factors not from a conditional distribution, we would have to normalize \(\frac{\tau(J)}{\sum_J \tau(J)}\).

Complexity of Variable Elimination Ordering¶

We discussed previously that variable elimination ordering determines the computational complexity. This is due to how many variables appear inside each sum. Different elimination orderings will involve different number of variables appearing inside each sum.

The complexity of the VE algorithm is

where

- \(m\) is the number of initial factors.

- \(k\) is the number of states each random variable takes (assumed to be equal here).

- \(N_i\) is the number of random variables inside each sum \(\sum_i\).

- \(N_\text{max} = \argmax_i N_i\) is the number of random variables inside the largest sum.

Example: Complexity of Elimination Ordering \(\prec \{C, D, I, H, G, S, L\}\)¶

Let us determine the complexity for the example above.

Here are all the initial factors:

So \(m = \vert \Phi \vert = 8\)

Here are all the sums, and the number of random variables that appear in them

Therefore the largest sum is \(N_G = 4\)

For simplicity, we assume all variables take on \(k\) states.

So the complexity of the variable elimination under this ordering is \(O(8*k^4)\).

(Tutorial) Example: Elimination Ordering \(\prec \{G, I, S, L, H, C, D\}\)¶

This is a variable elimination ordering over \(m=8\) factors each with \(k\) states. The sum with the largest number of variables participating has \(N_\text{max} = 6\) so the complexity is

(Tutorial) Example: Elimination Ordering \(\prec \{D,C,H,L,S,I,G \}\)¶

This is a variable elimination ordering over \(m=8\) factors each with \(k\) states. The sum with the largest number of variables participating has \(N_\text{max} = 4\) so the complexity is